The University of Tasmania is investing in a new high-performance computing (HPC) cluster to meet the needs of a research thrust that is becoming increasingly data intensive.

University Acting Deputy Vice-Chancellor (Research) Professor Clive Baldock said the investment – which will include hardware and data centre facilities – will strengthen the University’s research effort to solve urgent scientific problems in existing strengths, including climate and ocean sciences.

It will also enable the University to meet emerging demand in fields such as engineering, health and medical research, with a focus on genomics.

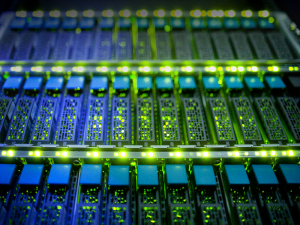

The HPC system will be housed in a new purpose-built research data centre at the University’s Sandy Bay Campus, with supplier Huawei to provide all equipment, racks, cables, and associated accessories.

The facility will support applications using more than 7,000 Central Processing Unit (CPU) cores. This will expose researchers to the scaling problems of jobs with large CPU counts and give them essential experience if they want to migrate workloads to larger facilities, such as the supercomputer at the National Computational Infrastructure (NCI) facility in Canberra.

“The traditionally complex HPC facilities will now be significantly more accessible to a wider group of researchers due to many new available technologies, such as hybrid HPC-cloud and next generation machine-learning applications,” Professor Baldock said.

This new HPC purchase is part of a rolling program by the University of Tasmania to round out a portfolio of eResearch services providing cloud-compute, HPC-compute, and research data storage for Tasmanian researchers and their collaborators.

The overall design can accommodate a tripling in size of the HPC environment when required.
